At the Society for Scholarly Publishing’s 2024 annual meeting in Boston, TNQTech presented a breakout session titled ‘The coordinated dance between AI and human-intelligence for optimal outcomes’. In this note I’m summarising the most interesting points we covered; the recorded video at the end of this page goes into more detail.

Abhi and Neel explored the scenarios in which we should use AI, and the two approaches that broadly categorise those types of interactions. They also discussed how to leverage AI for optimal business outcomes in a way that is sustainable, ethical, and practical.

The presentation rests on our belief that we should not use technology just because it is available. Instead, we should use it when we can be sure that it is useful and safe, and when its value is clear.

“The intuitive mind is a sacred gift and the rational mind is a faithful servant. We have created a society that honors the servant and has forgotten the gift.”

Albert Einstein

As AI becomes more relevant, the question of human relevance becomes more pressing. Why is human involvement indispensable? One simple way to answer this is to look at the differences between AI and human beings. AI lacks creativity, intuition, and emotional intelligence. AI is underpinned by statistics: it makes predictions based on data and finds the logic and patterns that already exist within that data. However, AI lacks nuance and the ability to make decisions that combine data or facts with context and intuition. It lacks out-of-the-box thinking, quite literally! Future advances will make AI more sophisticated and improve its rational outputs, but for these reasons it is unlikely to replace humans.

Another way to examine our relevance in the world of AI is to ask whether we can trust AI the way we trust people. Two arguments explain the prevailing view on trust. First, the biases that plague AI systems lead people to see AI as untrustworthy. Second, while our ability to explain our decisions builds trust and accountability, AI’s inability to do the same fosters mistrust. It seems safe to assume that trust in AI’s output will remain questionable for the foreseeable future.

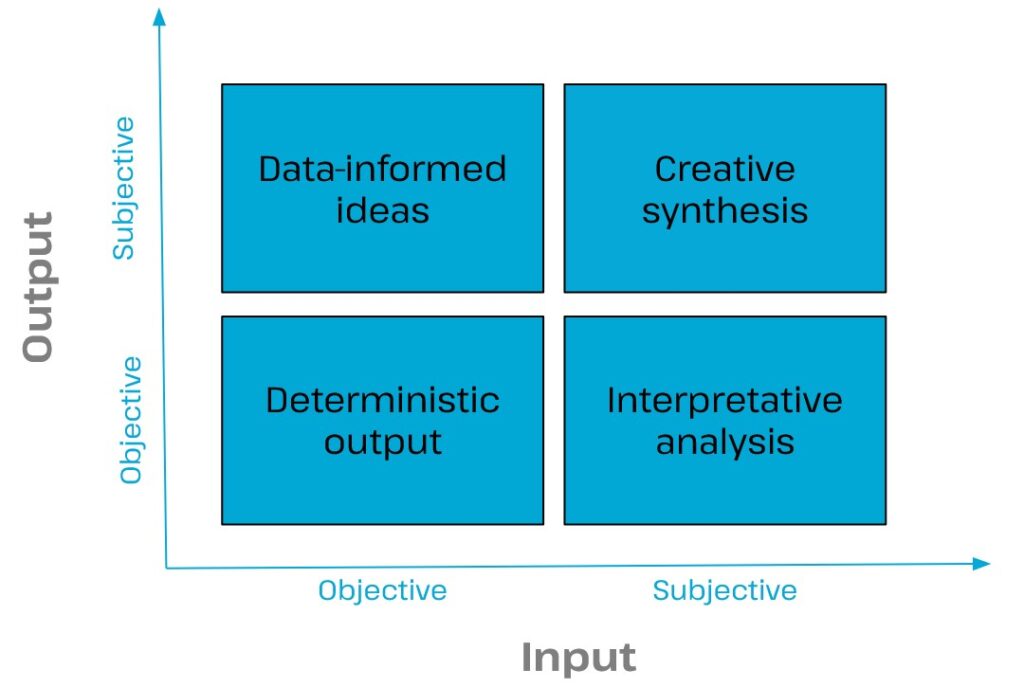

The Dialogue Dynamic Grid (DDG) is a framework that has helped us understand AI better. In simple terms, it classifies interactions according to whether the system’s input and output are subjective or objective. The video at the bottom of this page explains this in more detail.

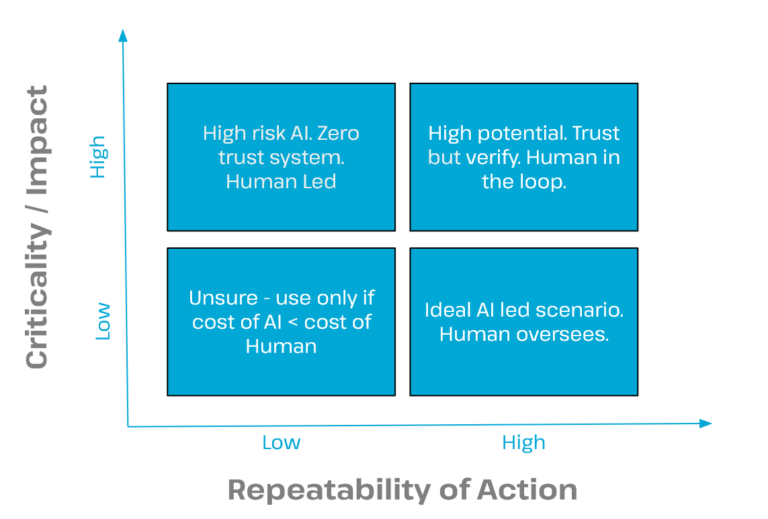

Another mental framework we use to decide when and how to use AI effectively looks at two dimensions of a task or action: how repeatable it is, and how critical it is (its impact). Abhi talks about this model in detail in the video.

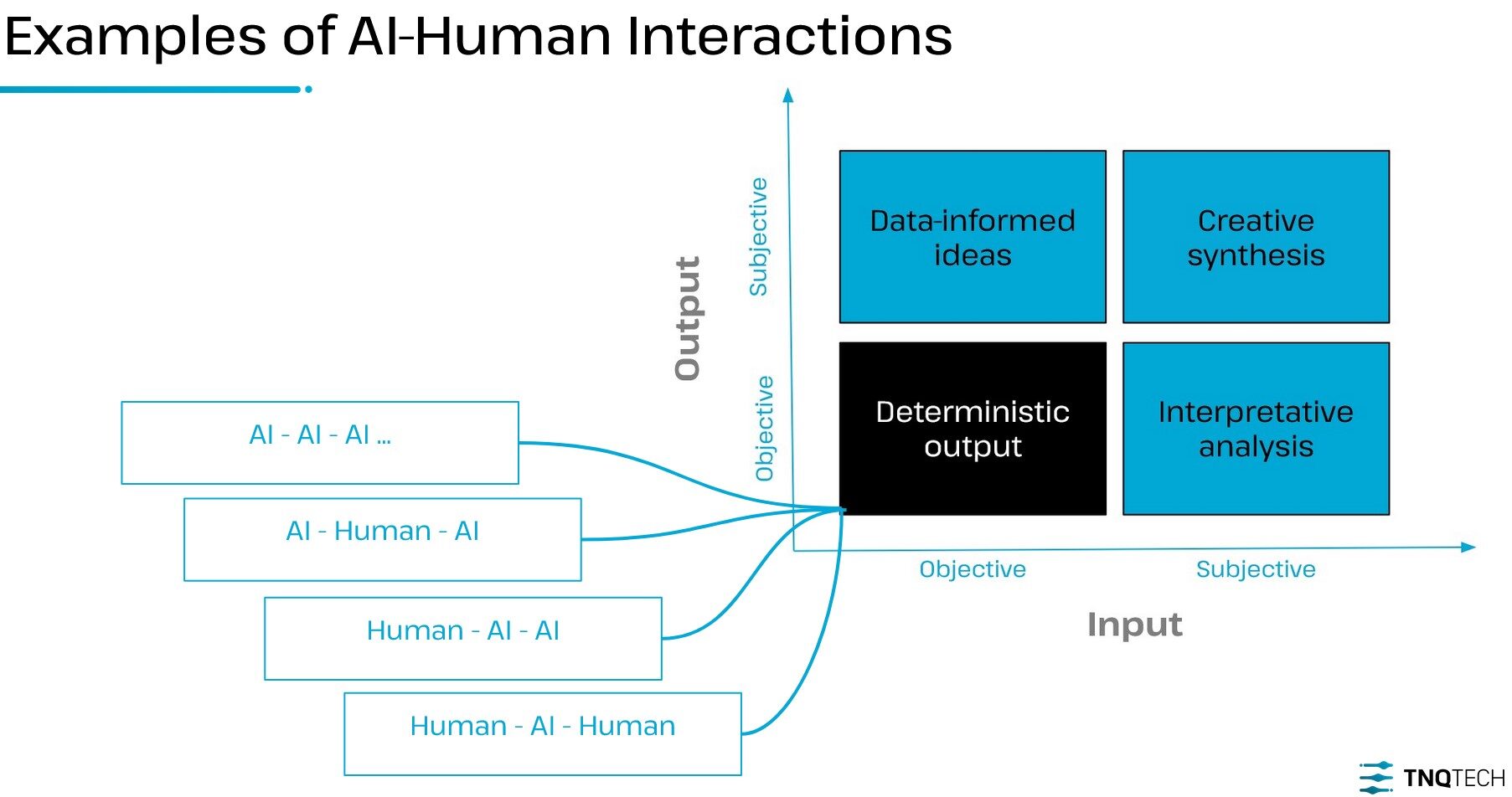

The authors of a 2023 multidisciplinary study identified two emerging patterns in how people use AI, and coined the terms Cyborgs and Centaurs to describe them.

The Centaur approach involves a clear division of labour, where humans and AI each handle the tasks they are best suited for. In the Cyborg approach, humans and AI work in a deeply integrated way, sharing tasks and passing them back and forth. For example, a human can pose an initial research question, and the AI can search the millions of pages of research material at its disposal and propose a direction, work that might take people months or years. Depending on the AI’s output, the human can respond with further instructions and keep iterating. This back and forth can produce intelligent, insightful outcomes that humans alone would take significantly longer to achieve.

Both approaches carry a significant risk. Research indicates that when AI works well, it boosts average human performance, which is a major reason for the excitement around AI. The danger is that when AI performs exceptionally well, people may start relying on it blindly. This is similar in some ways to ‘highway hypnosis’, where drivers on a familiar route zone out and rely on their mental map rather than on what they actually see.

When we first began testing our AI models, we noticed that the better the output quality, the more our team relied on the models. When AI performed well consistently, people began to miss the moments when their own intervention was required, allowing significant errors to creep in. Fortunately, we identified this during a pilot and are now exploring deliberate design interventions to address it, so that technology does not get in the way of quality.

By considering parameters such as the nature of the input, task complexity, criticality, and cost, we can design a workflow that leverages AI-human interaction opportunistically. A few crucial questions determine some of the variables in such a workflow: Who triggers the task, human or AI? If AI has triggered a task, can it complete the task end-to-end on its own? This is possible, but it is usually not the case. Where exactly is the human-in-the-loop needed? Who validates that the task is complete, human or AI?
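One way to make these questions concrete is to record the answers for each step of a workflow. The sketch below is a hypothetical illustration, not TNQTech’s actual tooling: the `Actor` enum, the `WorkflowStep` fields, and the example step names are assumptions chosen to mirror the questions (who triggers, who executes, who validates).

```python
from dataclasses import dataclass
from enum import Enum


class Actor(Enum):
    HUMAN = "human"
    AI = "ai"


@dataclass(frozen=True)
class WorkflowStep:
    # Hypothetical record answering the design questions for one step.
    name: str
    triggered_by: Actor  # Who triggers the task?
    executed_by: Actor   # Who does the work?
    validated_by: Actor  # Who validates completion?

    def is_end_to_end_ai(self) -> bool:
        """True only if AI triggers, executes, and validates the step on its own."""
        return all(
            actor is Actor.AI
            for actor in (self.triggered_by, self.executed_by, self.validated_by)
        )


def human_in_the_loop(steps: list[WorkflowStep]) -> list[str]:
    """Names of the steps where a human participates at any point."""
    return [step.name for step in steps if not step.is_end_to_end_ai()]


# Illustrative (hypothetical) steps in a manuscript-processing workflow.
workflow = [
    WorkflowStep("format_references", Actor.AI, Actor.AI, Actor.AI),
    WorkflowStep("copyedit_abstract", Actor.AI, Actor.AI, Actor.HUMAN),
    WorkflowStep("resolve_author_query", Actor.HUMAN, Actor.HUMAN, Actor.HUMAN),
]

print(human_in_the_loop(workflow))  # ['copyedit_abstract', 'resolve_author_query']
```

Writing the answers down per step makes it easy to see which steps are fully automated and where people need to be placed.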

Ideally, we should know not only where in the workflow AI will perform better, faster, or more cheaply than people, but also whether AI can recognise when to ask humans for help. At TNQTech, we address this through error awareness, which is our approach to technology in general and to AI in particular. Error awareness enables AI models to request human intervention when they are unsure whether their output is correct, based on a predefined confidence-score threshold. A human with the relevant skill is placed at the point in the workflow where that kind of intervention is required.
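The threshold-based escalation described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not TNQTech’s implementation: the `AIOutput` record, the `route_output` function, the 0.9 default threshold, and the task-to-reviewer mapping are all hypothetical, and it assumes the model reports a confidence score between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class AIOutput:
    task_type: str     # hypothetical task category, e.g. "language_edit"
    result: str        # the AI's proposed output
    confidence: float  # assumed self-reported confidence in [0, 1]


# Hypothetical mapping from task type to the human skill group that handles exceptions.
REVIEWER_FOR_TASK = {
    "reference_check": "bibliographic_editor",
    "language_edit": "copy_editor",
}


def route_output(output: AIOutput, threshold: float = 0.9) -> str:
    """Return 'auto' if the output can pass through, else the reviewer group to escalate to."""
    if not 0.0 <= output.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if output.confidence >= threshold:
        return "auto"
    # Below the threshold the model is 'unsure': hand off to the skilled human.
    # Task types without a dedicated group fall back to a generalist reviewer.
    return REVIEWER_FOR_TASK.get(output.task_type, "general_reviewer")


print(route_output(AIOutput("language_edit", "Revised abstract", 0.95)))  # auto
print(route_output(AIOutput("language_edit", "Revised abstract", 0.62)))  # copy_editor
print(route_output(AIOutput("figure_check", "Caption check", 0.40)))      # general_reviewer
```

In practice the threshold would likely differ by task, tuned to how critical each task is; a single default keeps the sketch simple.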

To sum up, making AI work for optimal outcomes, which in a business context usually means “deterministic output”, still seems to require human involvement. How to combine people with AI depends on your business’s needs (the subjectivity or objectivity of the input and output) and its context. One size does not fit all! In our experience, exception handling often requires people with exceptional skills. Asking and answering critical questions will help us create a workflow that balances human and AI contributions effectively.