Just because we can do something doesn’t mean we should—a simple enough statement that we would all agree with. So why is it that we forget this little bit of wisdom when it comes to AI? We tend to lose sight of why we need to use AI in our businesses and forget to ask the essential question—what are we trying to achieve?

People are often waylaid by the notion of automation and will consider it the final goal. When we began to engage with AI as more than just a tool at TNQTech, we realised that automation is not always the best goal.

We should be weighing the efficiency against the effectiveness of applying automation or AI to a task. This wasn’t easy to do across our existing and new products because AI is not a silver bullet. It’s bits of silver, if you will, and every scenario demands a slightly different amount of it.

So how do we figure out if and where to use AI? When? And how much?

Before we go any further, I want to acknowledge that there are many factors to consider in how we get to these solutions. This is perhaps a simple take, but indulge me for a moment while I draw you a map of what I’ve been using.

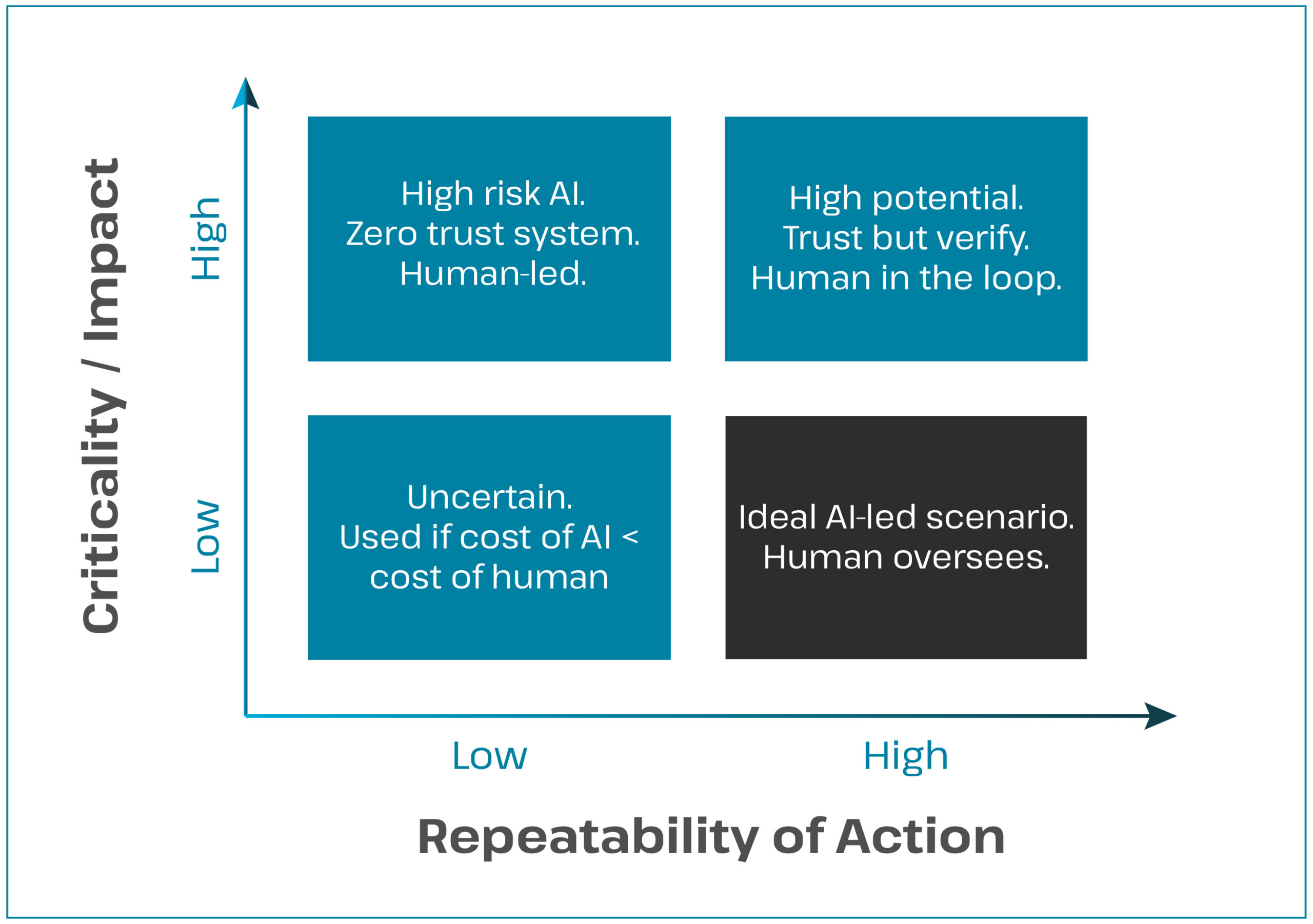

There are multiple parameters to consider but, in my mind, there are two fairly straightforward dimensions that allow for elimination (i.e., where not to consider AI).

1. How critical is the impact of the task or action, and how severe will the consequence be if the outcome is inaccurate?

2. Is the task repeatable?

The answers to these two questions can be plotted together to derive a framework that can potentially point us to where to use AI, and what level of human involvement might be needed.

High repeatability means there will be a large amount of data from which patterns can be determined, which makes it easier to train an AI model. On the other hand, if the task or action isn’t repeatable, the available data may be sparse and the output of the trained system is likely to be subjective and inaccurate.

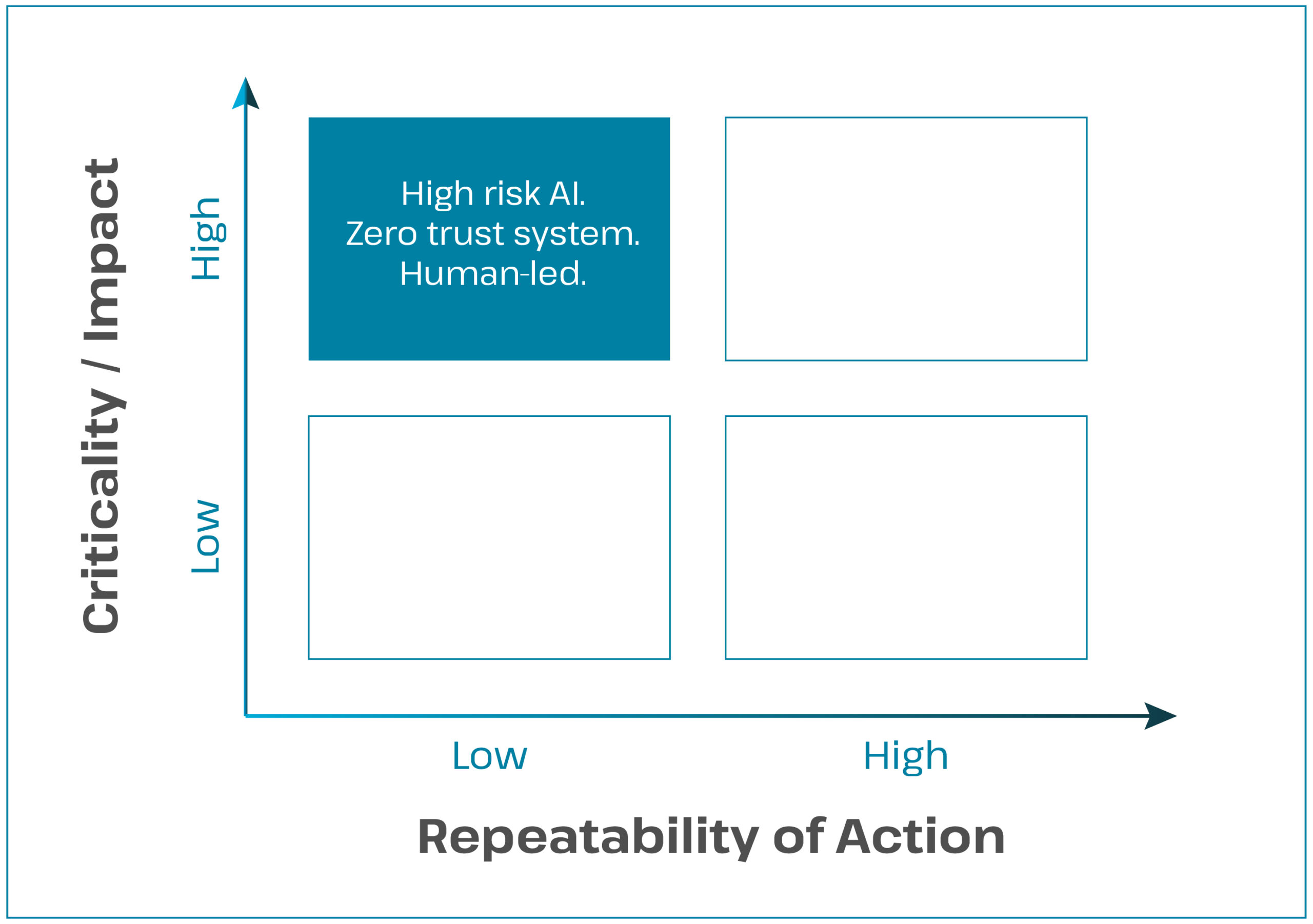

The first scenario is when the repeatability of the task is low, meaning the task doesn’t follow a pattern, and the criticality or impact is high. Here, the use of AI should always be initiated by humans, and the outcome must always be reviewed and acted on by humans.

In this case, there must be ZERO TRUST accorded to AI, or it could easily lead to a high-risk scenario. An example of this is the process of peer review.

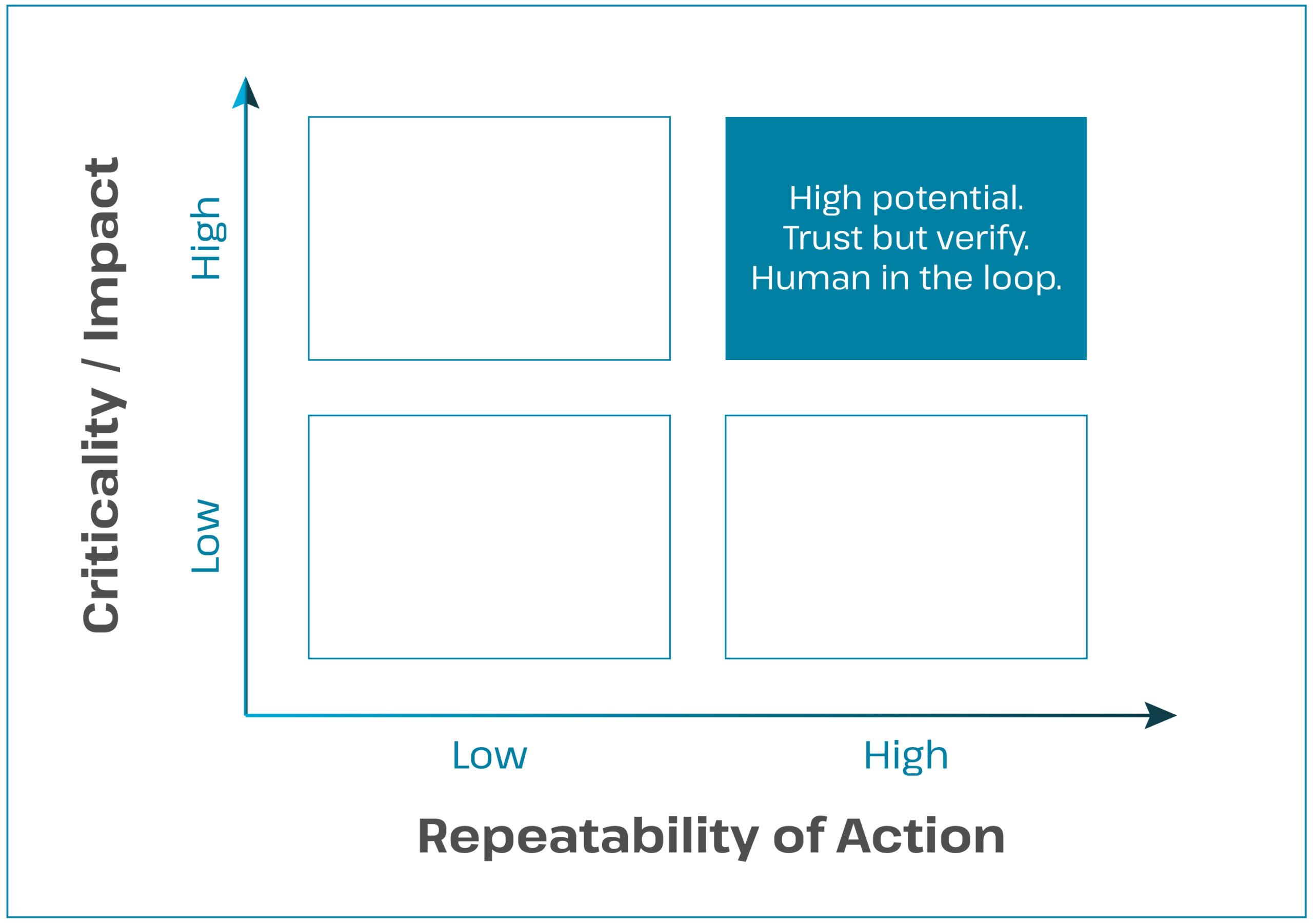

This scenario of the human-AI dance has great potential—the repeatability of the task is high, meaning the task follows a pattern, and the criticality or impact of the outcome is also high. This is a trust-but-verify scenario, where how much of the output you verify will depend on your perception of the criticality and risk to your business. We propose that aspects of research integrity fall under this category—AI is able to predict pattern-based behaviour, but to take action on the output of AI without human oversight is risky.

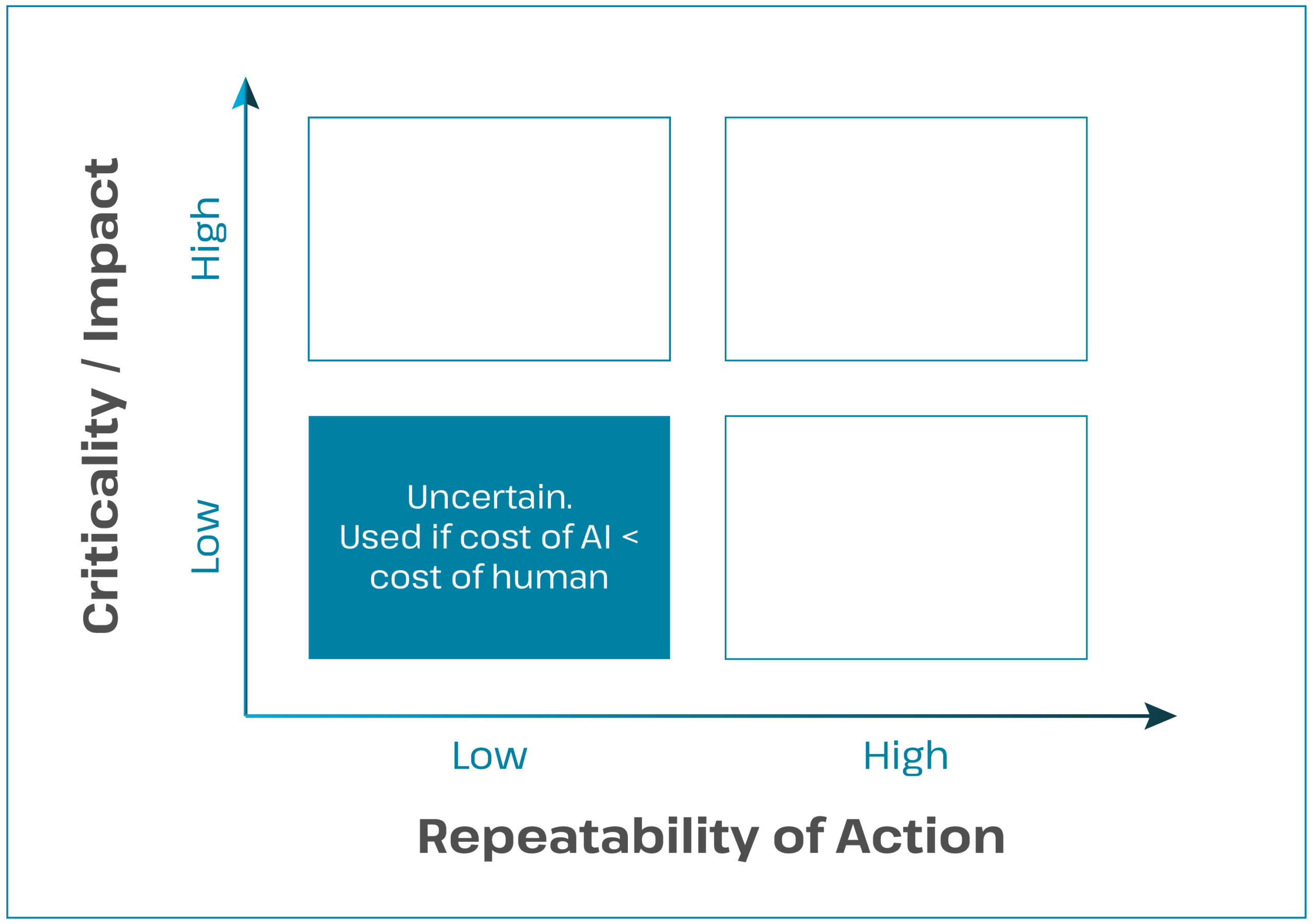

This is a confusing scenario: repeatability of the task is low, meaning the task doesn’t follow a pattern, and the criticality or impact of the outcome is also low. Consider using AI only if it is a lot cheaper than humans. It will still require a lot of testing and verification, because exploring AI is itself an expensive starting point. For the moment, we are happy to ignore anything that falls in this area. We should focus on solving the 20% of problems that will have 80% of the impact, and it is safe to assume you will not find that 20% in this quadrant.

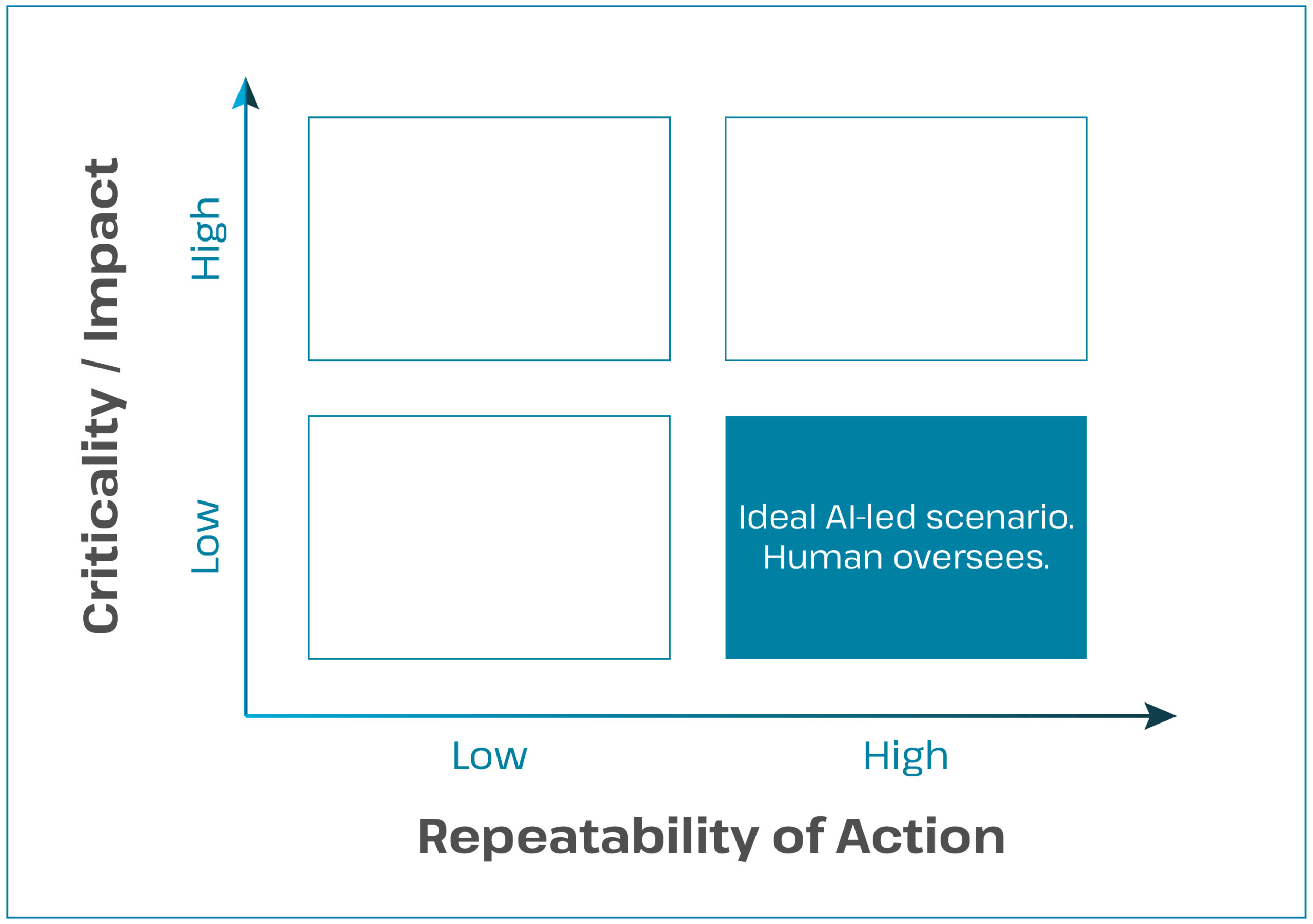

This is the ideal AI-led scenario: repeatability of the task is high, but the criticality or impact of the outcome is relatively low. Here, AI might make mistakes, but that’s okay. You could have a trust-but-verify mechanism here too, but on a sample basis. Mechanical copy editing may fall in this category. The impact of a capitalisation error is perceived, not real, but the impact of a language error that changes the meaning of the research is very high. Therefore, a distinction between the two is necessary, with humans placed in the middle or at the end of the workflow. In some cases, upon careful consideration, humans might not be needed at all. This is where AI is complementary to humans.
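For readers who think in code, the four quadrants can be written down as a small lookup. This is only an illustrative sketch, not a tool we use: the names `Oversight` and `recommend_oversight` are hypothetical, and reducing repeatability and criticality to yes/no answers is a simplifying assumption, since in practice both are matters of degree and judgment.

```python
from enum import Enum


class Oversight(Enum):
    """Hypothetical labels for the four quadrants described above."""
    ZERO_TRUST = "humans initiate AI use, then review and act on every outcome"
    TRUST_BUT_VERIFY = "AI predicts patterns, humans verify before acting"
    DEPRIORITISE = "consider AI only if it is a lot cheaper than humans"
    AI_LED = "AI leads, humans verify on a sample basis"


def recommend_oversight(repeatable: bool, critical: bool) -> Oversight:
    """Map the two questions to a quadrant.

    Assumption: each dimension is collapsed to a boolean for illustration.
    """
    if critical:
        # High criticality: a human always stays in the loop.
        return Oversight.TRUST_BUT_VERIFY if repeatable else Oversight.ZERO_TRUST
    # Low criticality: mistakes are tolerable, so cost decides.
    return Oversight.AI_LED if repeatable else Oversight.DEPRIORITISE


# The examples used in this article, placed in their quadrants.
examples = {
    "peer review": recommend_oversight(repeatable=False, critical=True),
    "research integrity checks": recommend_oversight(repeatable=True, critical=True),
    "mechanical copy editing": recommend_oversight(repeatable=True, critical=False),
}

for task, level in examples.items():
    print(f"{task}: {level.name} ({level.value})")
```

The value of writing it this way is mostly in forcing the two questions to be answered explicitly for each task before any AI work begins.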

The repeatability-criticality framework can be a map for businesses to navigate the world of AI and determine which parts of workflows will benefit from artificial intelligence, and which will flourish with human intelligence.

In our world, the high-repeatability, low-criticality quadrant is the one we are concentrating on, and we recommend you explore scenarios in your business that fit this quadrant, where you are likely to find low-hanging fruit: