CDO, R&D

As a tech-driven company serving some of the world’s largest scholarly publishers, we aspire to spearhead advances that disrupt and revolutionise publishing processes.

As part of our ‘Future Technology Vision’ (FTV) programme, we took a careful look at the technology that supports our service delivery and evaluated it against the expectations and evolution of the publishing industry. Guided by innovation, agility, and an unwavering commitment to excellence, we have been redesigning every component of our production technology backbone to be more efficient, continuously learning, and error-aware.

The pillars that hold up this programme are technology automation, error awareness through the use of machine learning (ML), a data pipeline for continuous learning, and a focus on design centricity. These pillars have stood us in good stead throughout the process of discovering, learning, unlearning, and evolving.

Automation has always been a focal point for our industry, but TNQTech’s approach to it has evolved. We no longer chase specific automation percentages, which we now see as increasingly moot. Instead, automation should deliver an effective outcome: significantly better quality and efficiency.

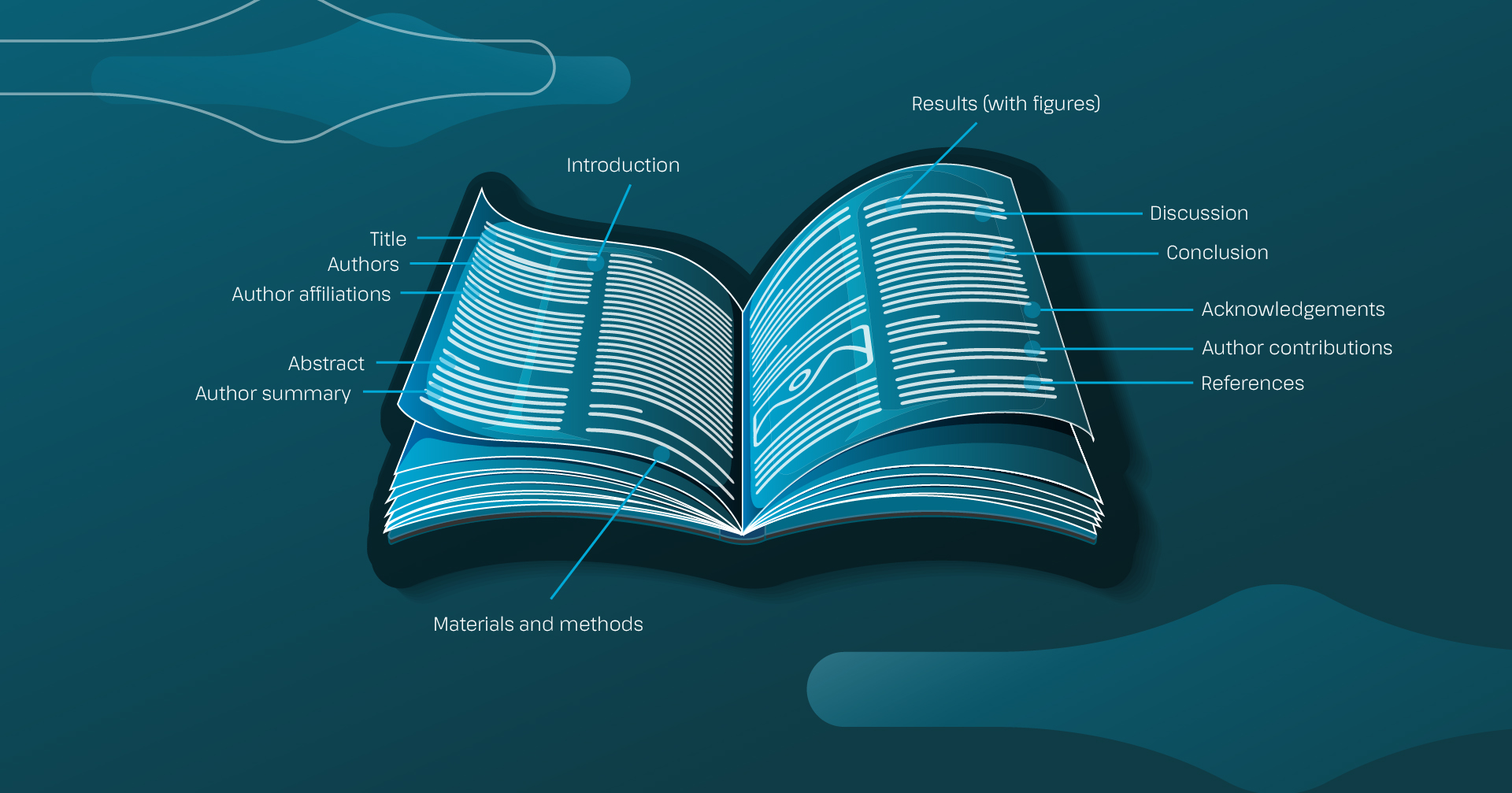

In addition, we don’t view a manuscript as a monolithic unit anymore. We break it down into modular ‘lego block’ components (references, tables, affiliations, citations, etc.) and apply a specific technology to each of them to solve a specific problem, always keeping in mind the desired business outcome.

Over the last few years, we’ve meticulously optimised our tools, especially in areas like structuring and copy editing. By assessing the efficacy of copy editing rules and gradually transitioning to a black box approach, we’ve achieved remarkable results in quality, while offering more meaningful support to our copy editors.

Higher automation doesn’t always lead to higher efficiency. When most of an automated system’s output is correct, finding its occasional errors becomes a needle-in-a-haystack problem: reviewers have to check everything to catch a few mistakes, which erodes human efficiency. Acknowledging that tools are not infallible and that human intervention remains indispensable for excellent outcomes, we’ve invested two years in developing error-awareness mechanisms that target granular components of an article. The ML models we use attach a confidence score to their predictions, so the system can recognise and flag its own likely errors.

This error awareness means the product directs human reviewers only to the places where it has likely failed, and guides them on how to act there. This marks a significant leap forward in the design of technology-human interaction, making the most efficient use of human intelligence and amplifying productivity gains.
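The routing idea can be illustrated with a minimal sketch. It assumes each modular component (reference, table, affiliation, and so on) comes back from an automated tool with a confidence score between 0 and 1, and that items below a per-component threshold go to a human. The `ComponentResult` class, the `route` function, and the threshold values are all hypothetical illustrations, not TNQTech’s actual system or settings.

```python
from dataclasses import dataclass


@dataclass
class ComponentResult:
    """Hypothetical record for one processed manuscript component."""
    component_id: str
    kind: str          # e.g. "reference", "table", "affiliation"
    output: str        # the tool's structured or edited output
    confidence: float  # model's estimated probability that the output is correct


# Illustrative per-component thresholds (assumed values; in practice they
# would be tuned on historical data for each component type).
THRESHOLDS = {"reference": 0.90, "table": 0.80, "affiliation": 0.95}
DEFAULT_THRESHOLD = 0.90


def route(results):
    """Split results into auto-accepted items and items flagged for human review."""
    accepted, flagged = [], []
    for r in results:
        threshold = THRESHOLDS.get(r.kind, DEFAULT_THRESHOLD)
        if r.confidence >= threshold:
            accepted.append(r)
        else:
            flagged.append(r)
    # Present the least confident items to reviewers first.
    flagged.sort(key=lambda r: r.confidence)
    return accepted, flagged


results = [
    ComponentResult("ref-1", "reference", "...", 0.97),
    ComponentResult("ref-2", "reference", "...", 0.62),
    ComponentResult("tab-1", "table", "...", 0.85),
    ComponentResult("aff-1", "affiliation", "...", 0.91),
]
accepted, flagged = route(results)
print([r.component_id for r in accepted])  # ['ref-1', 'tab-1']
print([r.component_id for r in flagged])   # ['ref-2', 'aff-1']
```

Per-component thresholds reflect the modular view of the manuscript: each component type can trade reviewer workload against missed errors differently. The approach only works if the model’s confidence scores are reasonably well calibrated, so that a score of 0.9 really does mean the output is right about 90% of the time.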

Good decisions and continuous improvement depend on good data. We have a robust data pipeline across all our technology touchpoints that captures every user-tool interaction at a granular level. This data has been invaluable to our Operations and Technology teams, who can now make data-informed decisions, uncovering previously hidden inefficiencies and correcting them. All of this microdata feeds a visual dashboard, a powerful component that is helping us look beyond automation for its own sake and focus on optimal outcomes that drive continuous improvement in both our products and user behaviour.
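As a rough illustration of how granular interaction data can surface hidden inefficiencies, the sketch below aggregates hypothetical interaction events by tool and component type, ranking them by total human time and edit rate, the kind of summary a dashboard might display. The `InteractionEvent` fields, the action names, and the `summarise` function are assumptions made for illustration; the article does not describe TNQTech’s actual event schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class InteractionEvent:
    """Hypothetical record of one user-tool interaction."""
    user_id: str
    tool: str            # e.g. "copyedit", "structuring"
    component_kind: str  # e.g. "reference", "table"
    action: str          # e.g. "accept", "edit", "reject"
    seconds: float       # time the user spent on this interaction


def summarise(events):
    """Return (key, total_seconds, edit_rate) rows, highest total time first."""
    stats = defaultdict(lambda: {"count": 0, "edits": 0, "seconds": 0.0})
    for e in events:
        s = stats[(e.tool, e.component_kind)]
        s["count"] += 1
        s["seconds"] += e.seconds
        if e.action == "edit":
            s["edits"] += 1
    # Rank (tool, component) pairs by total human time spent on them.
    return sorted(
        ((key, s["seconds"], s["edits"] / s["count"]) for key, s in stats.items()),
        key=lambda row: row[1],
        reverse=True,
    )


events = [
    InteractionEvent("u1", "copyedit", "reference", "edit", 40.0),
    InteractionEvent("u1", "copyedit", "reference", "accept", 5.0),
    InteractionEvent("u2", "structuring", "table", "edit", 90.0),
    InteractionEvent("u2", "copyedit", "affiliation", "accept", 3.0),
]
for (tool, kind), seconds, edit_rate in summarise(events):
    print(f"{tool}/{kind}: {seconds:.0f}s, edit rate {edit_rate:.0%}")
```

A high edit rate on a component type suggests the automated output there is often wrong, while high total time with a low edit rate suggests reviewers are checking output that rarely needs changing; both are candidates for closer attention.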

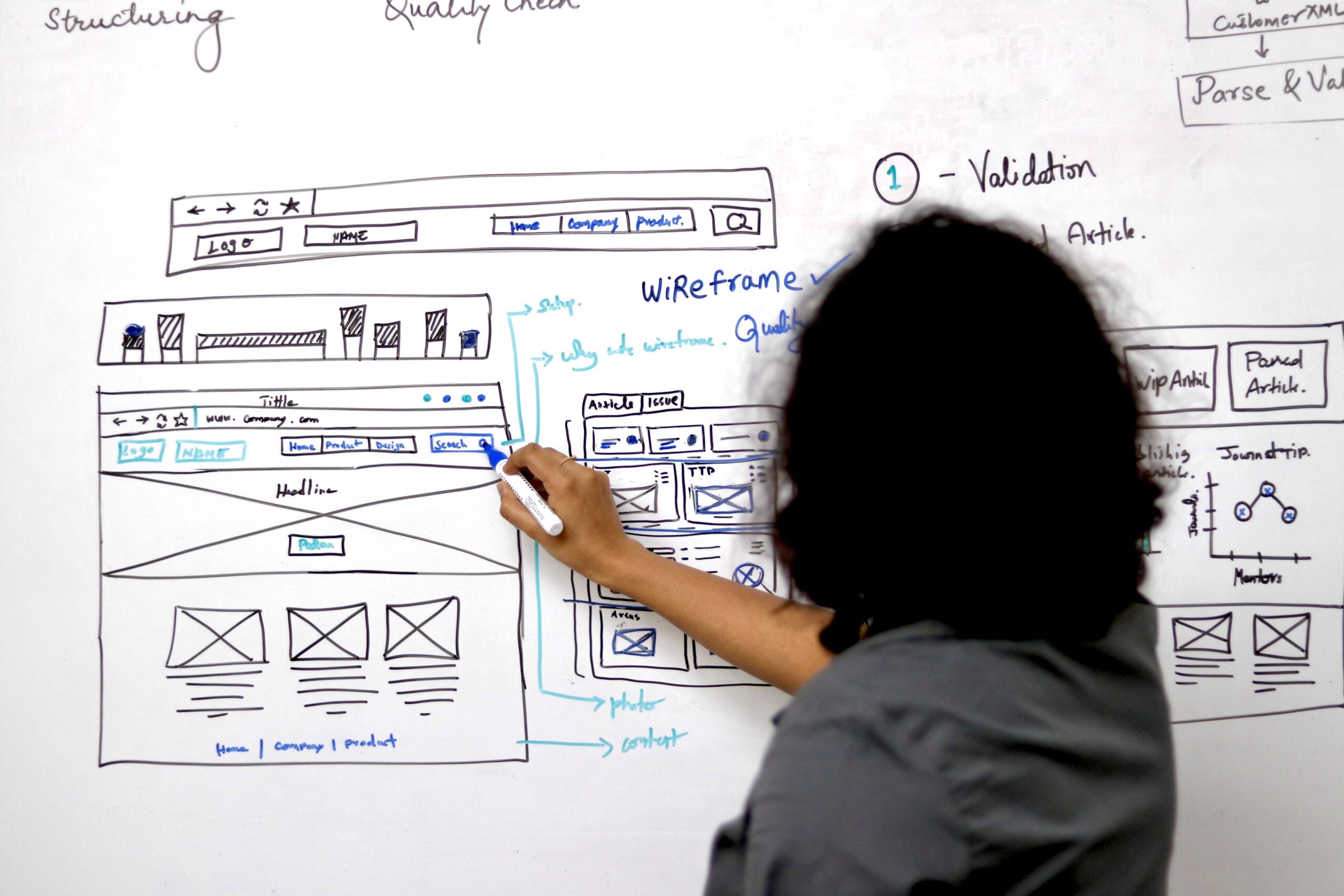

The fourth pillar introduces a holistic approach to design across our products and tools. Solid design isn’t just about attractive interfaces; it’s about identifying and solving problems in a way that gives users the most seamless, meaningful, and useful experience. The error-awareness pillar has significantly changed the way we work: it requires our users to change their behaviour, and it requires us to manage that change well. Our design approach has been reshaped to support this transition. We now build solutions based on user-tool interaction data, the psychology and behaviour of our users, and factors in our wider business environment, to achieve the best possible outcomes.

Our goal with the FTV programme is to surpass current industry benchmarks and set new ones as we progress. We aim to achieve faster turnaround, improved quality, and cost efficiency simultaneously. The programme is a truly transformative journey that lets us maximise outcomes by applying human acumen intelligently and at precise points.

Machine learning models are allowing us to depart sharply from the one-size-fits-all pattern and adopt approaches tailored to the specific needs of each journal, book, and publisher.

We’re seeking a delicate balance that achieves everything we’ve set out to attain, and that is only possible through open dialogue with our customers and leaders in publishing.